Multiqueue-optimization


Future Optimizations

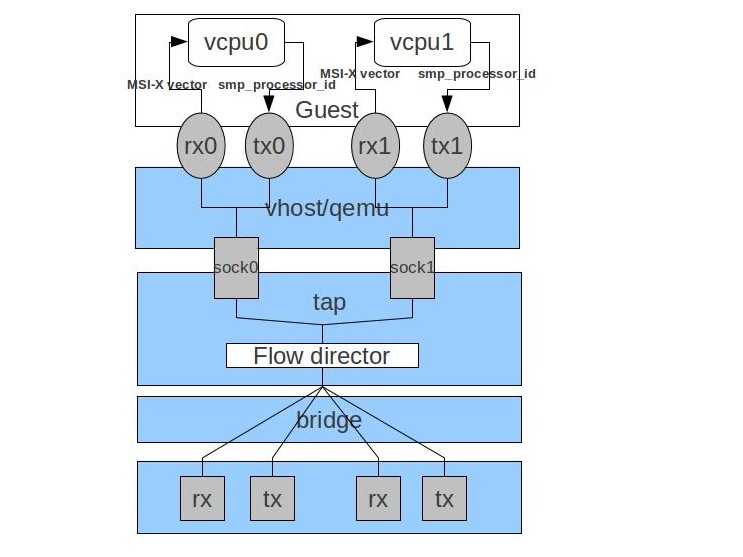

Per-cpu queue

For better parallelism, per-cpu queues are needed. This could be done as follows:

- For the backend (tap/macvtap): allocate as many queues as there are vcpus.

- For the guest driver: map queues to vcpus 1:1, and make sure each rx/tx queue pair is handled on the same vcpu:

  - bind the irq affinity of the queue pair to that vcpu

  - use the smp processor id to choose the tx queue when transmitting
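
The 1:1 mapping above can be sketched in plain C. This is a minimal user-space model, not driver code: `struct mq_dev`, `select_tx_queue()` and `irq_affinity_cpu()` are hypothetical names introduced for illustration, and the `cpu` argument stands in for whatever the guest kernel reports as the current processor id.

```c
#include <assert.h>

/* Hypothetical model of a multiqueue device: one rx/tx queue pair per vcpu. */
struct mq_dev {
    unsigned int num_queue_pairs; /* assumed to equal the number of vcpus */
};

/*
 * Choose the tx queue from the id of the cpu doing the transmit.
 * With a 1:1 mapping the queue index is simply the cpu id; the modulo
 * only guards the (assumed) case of fewer queue pairs than cpus.
 */
static unsigned int select_tx_queue(const struct mq_dev *dev, unsigned int cpu)
{
    assert(dev->num_queue_pairs > 0);
    return cpu % dev->num_queue_pairs;
}

/*
 * Return the vcpu that the irq of a queue pair should be bound to,
 * so that rx and tx for that pair are handled on the same vcpu.
 */
static unsigned int irq_affinity_cpu(const struct mq_dev *dev,
                                     unsigned int queue_pair)
{
    assert(queue_pair < dev->num_queue_pairs);
    return queue_pair;
}
```

With four queue pairs, a transmit issued on vcpu 2 selects tx queue 2, and the irq of queue pair 2 is bound to vcpu 2, so both directions of that pair stay on one vcpu.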

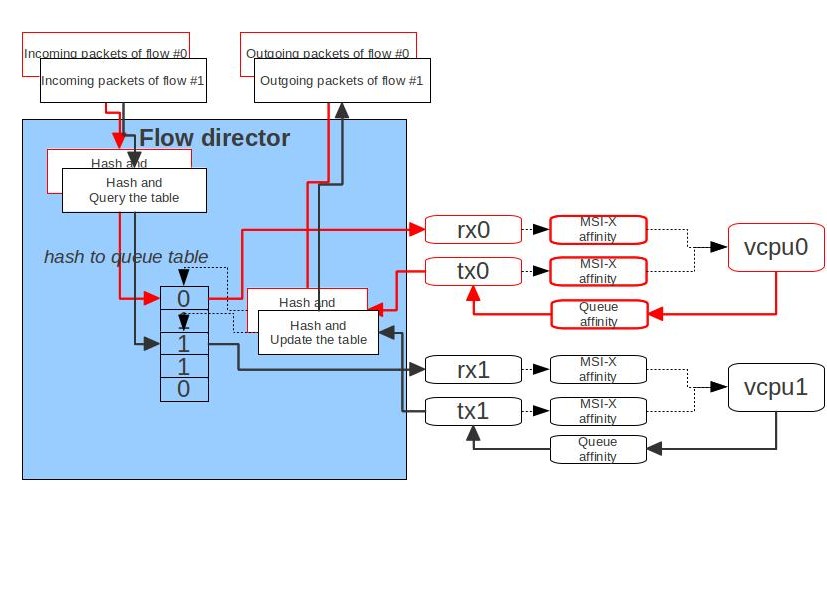

Flow director

For better performance with stream protocols (like TCP), we should make sure the packets of a single flow are handled on the same vcpu. This could be done by implementing a simple flow director in tap/macvtap.

The flow director is a hash-to-queue table that uses the lower bits of a packet's hash value as the index to look up which queue the flow belongs to. The table is built as the guest transmits packets to the backend (tap/macvtap), and it is queried when the backend chooses a queue to transmit a packet to the guest. It works as follows:

- When the virtio-net backend transmits packets to tap/macvtap, the hash of each skb is calculated, and its lower bits are used as the index at which to store the queue number in the table.

- When tap/macvtap wants to transmit a packet to the guest, the lower bits of the skb's hash are used to look up which queue the flow belongs to, and the packet is then put on that queue.
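
The two steps above can be modelled with a small table. This is a sketch under stated assumptions, not the tap/macvtap implementation: the 256-entry table size, the `FLOW_NO_QUEUE` sentinel, all function names, and the fallback used for flows that have not been recorded yet are choices made here for illustration. The packet hash is taken as an input rather than computed from an skb.

```c
#include <assert.h>
#include <stdint.h>

#define FLOW_TABLE_BITS 8                       /* assumed table size: 256 entries */
#define FLOW_TABLE_SIZE (1u << FLOW_TABLE_BITS)
#define FLOW_NO_QUEUE   UINT16_MAX              /* sentinel: no flow recorded yet */

/* Hypothetical hash-to-queue table, indexed by the lower bits of the hash. */
struct flow_director {
    uint16_t queue_of[FLOW_TABLE_SIZE];
};

static void flow_director_init(struct flow_director *fd)
{
    for (unsigned int i = 0; i < FLOW_TABLE_SIZE; i++)
        fd->queue_of[i] = FLOW_NO_QUEUE;
}

/* Keep only the lower FLOW_TABLE_BITS bits of the packet hash. */
static unsigned int flow_index(uint32_t hash)
{
    return hash & (FLOW_TABLE_SIZE - 1);
}

/* Guest -> backend: remember which queue this flow was sent on. */
static void flow_director_record(struct flow_director *fd,
                                 uint32_t hash, uint16_t queue)
{
    assert(queue != FLOW_NO_QUEUE);
    fd->queue_of[flow_index(hash)] = queue;
}

/* Backend -> guest: pick the queue recorded for this flow, if any. */
static uint16_t flow_director_lookup(const struct flow_director *fd,
                                     uint32_t hash, uint16_t num_queues)
{
    uint16_t queue = fd->queue_of[flow_index(hash)];

    assert(num_queues > 0);
    /* Assumed fallback for unknown or stale entries: spread by hash. */
    if (queue == FLOW_NO_QUEUE || queue >= num_queues)
        return (uint16_t)(hash % num_queues);
    return queue;
}
```

Because only the lower bits index the table, two flows whose hashes share those bits also share an entry, and the most recent transmit wins. That keeps the table small and lock-free to reason about, at the cost of occasionally steering a flow to another flow's queue.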

The flow director also needs the co-operation of per-cpu queues to work.